Fitting AI models in your pocket with quantization - Stack Overflow

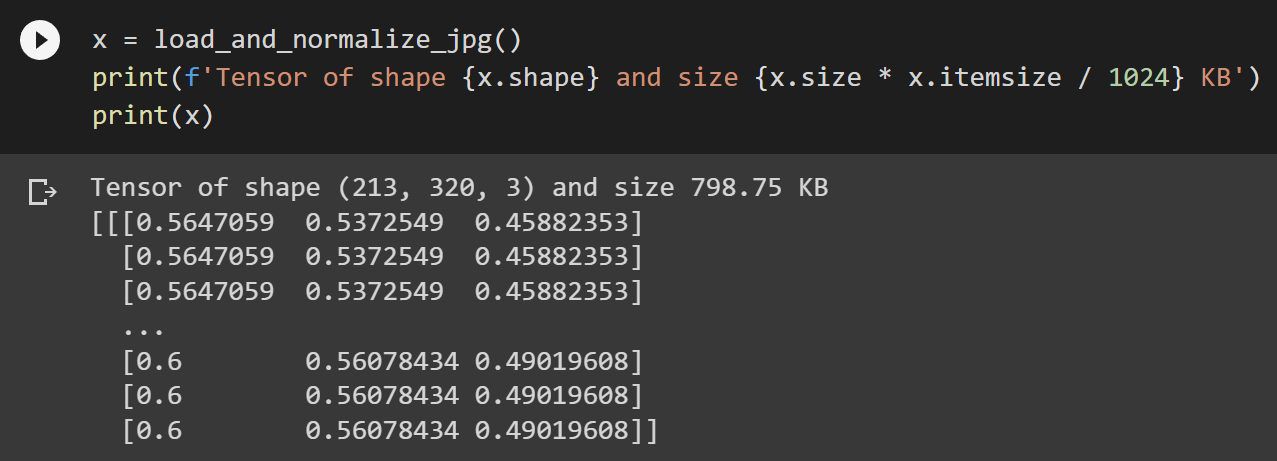

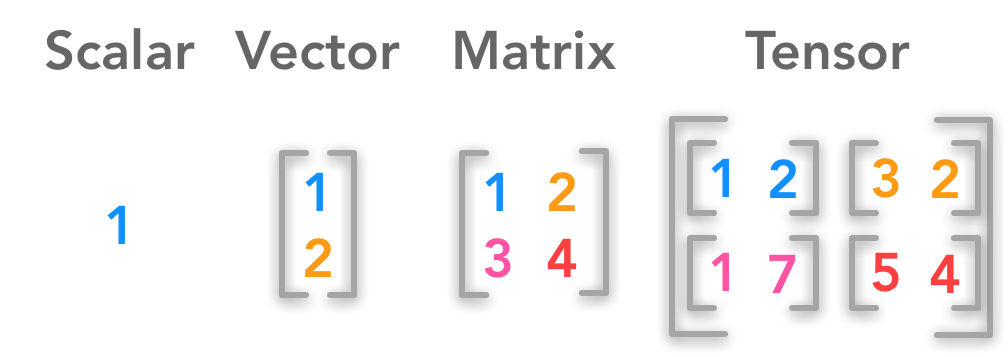

Getting your data in shape for machine learning - Stack Overflow

Running LLMs using BigDL-LLM on Intel Laptops and GPUs – Silicon Valley Engineering Council

deep learning - QAT output nodes for Quantized Model got the same min max range - Stack Overflow

neural network - Does static quantization enable the model to feed a layer with the output of the previous one, without converting to fp (and back to int)? - Stack Overflow

What is your experience with artificial intelligence, and can you

Ronan Higgins (@ronanhigg) / X

Preparing For Interaction To Next Paint, A New Web Core Vital, Smashing Magazine

Introduction to AI Model Quantization Formats, by Gen. David L.

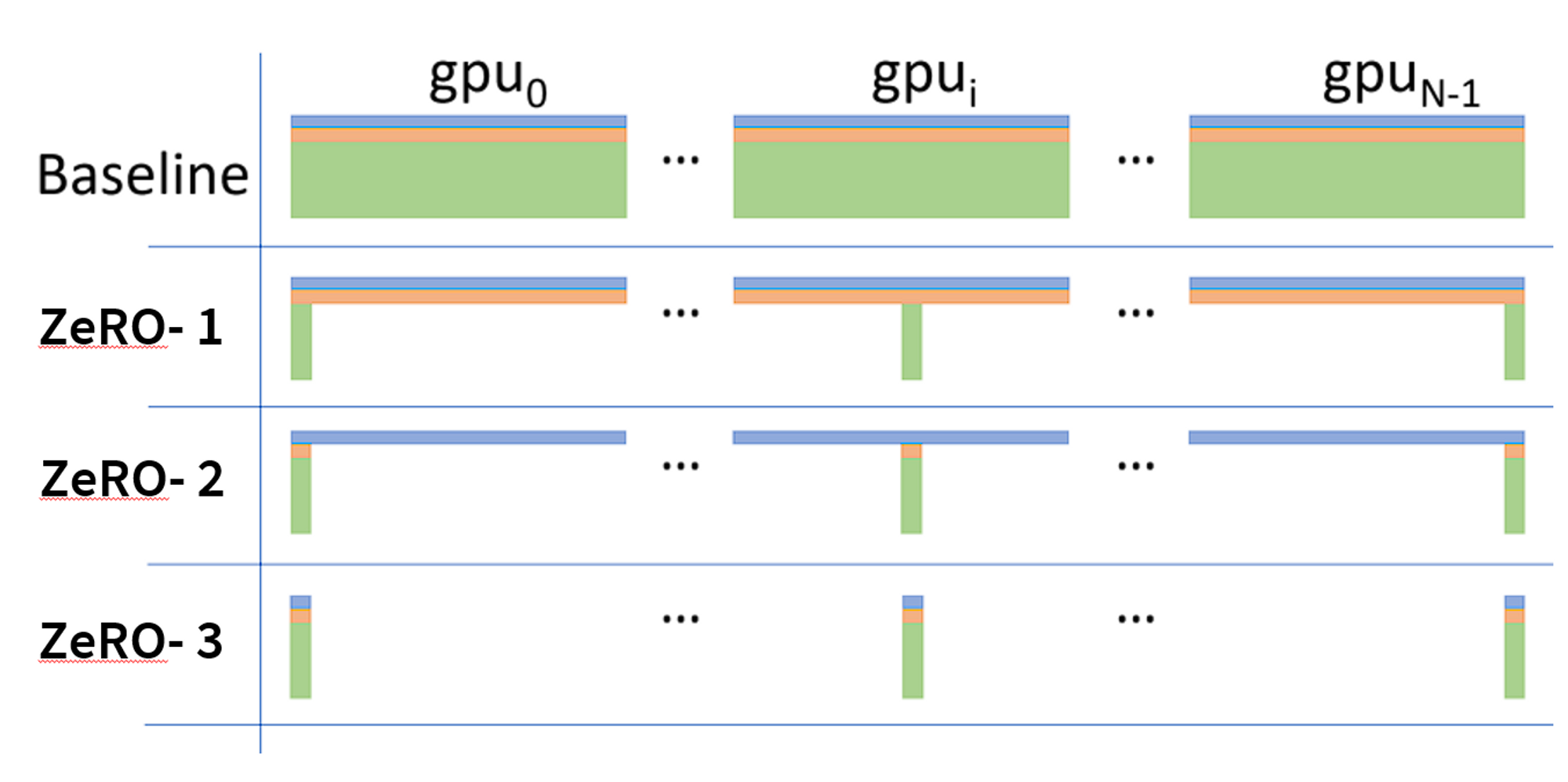

The Mathematics of Training LLMs — with Quentin Anthony of Eleuther AI

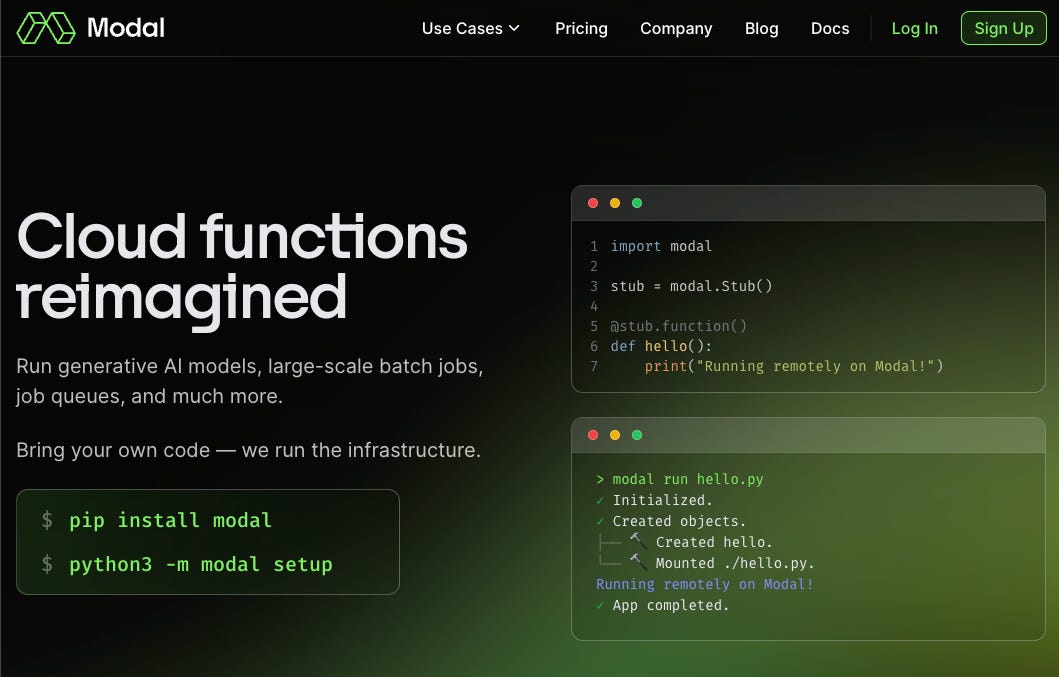

Truly Serverless Infra for AI Engineers - with Erik Bernhardsson of Modal

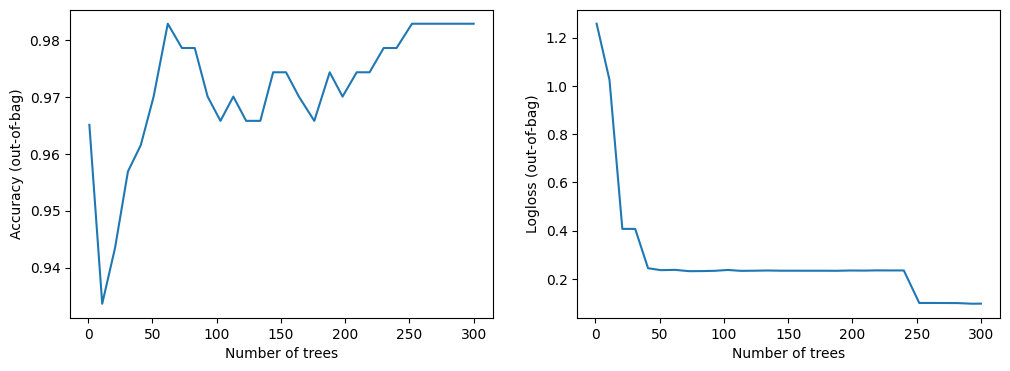

Build, train and evaluate models with TensorFlow Decision Forests

Improving INT8 Accuracy Using Quantization Aware Training and the

Pocket-rocket Definition & Meaning

dance.m0mz_ (@dance.m0mz_)'s videos with original sound - dance

Pocket Rockets In Poker: Meaning, How To Play, & Strategy

The best camping stoves of 2024, tried and tested by an expert in

The Fundamental Pant

Full Busted Figure Types in 34G Bra Size Sand Comfort Strap

BLACROSS 30 Inch 13x6 Deep Wave Lace Front Wigs Human Hair 180 Density Deep Part Curly Lace Front Wigs Human Hair Pre Plucked Glueless Transparent Lace Frontal Wig Pre Plucked with

HBO Max Pulls Nearly 200 'Sesame Street' Episodes - The New York Times

SPANX Hollywood Socialight Cami Stellar Blue Sz XL NWT

Conturve What is the best shapewear to wear under jeans?